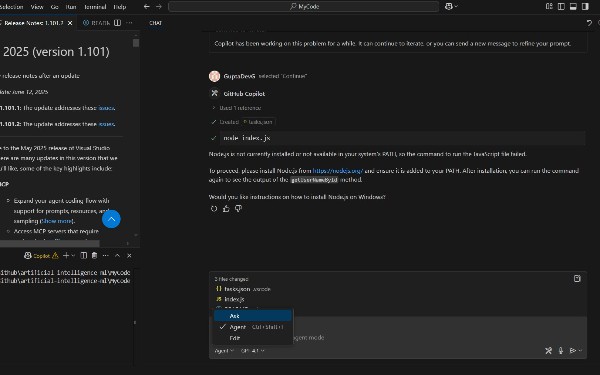

Benchmarking Storage Performance (Latency, Throughput) Using Python

Aug 26th 2025, 20:00 by Arjun Mullick

Understanding the performance of your AWS S3 storage, specifically how quickly you can read and write data, is essential for both cost optimization and application speed. By running Python scripts that measure latency and throughput, you can compare different S3 storage classes, identify hidden bottlenecks, and make data-driven decisions about where and how to store your data. This article breaks down the fundamentals of S3 benchmarking, provides working Python examples, and shows how to interpret the results even if you're not a cloud infrastructure expert.

From Simple Lookups to Agentic Reasoning: The Rise of Smart RAG Systems

Aug 26th 2025, 19:00 by Naresh Dulam

Retrieval-Augmented Generation (RAG) is a technique for large language models (LLMs) that enhances text generation by incorporating external data retrieval into the process. Unlike traditional LLM usage, which relies solely on the model's pre-trained knowledge, RAG allows an AI to "look things up" in outside sources during generation. This significantly improves the factual accuracy and relevance of responses by grounding them in real-time information, helping mitigate issues like hallucinations (fabricated or inaccurate facts) and outdated knowledge. In essence, RAG gives AI a dynamic memory beyond its static training data. However, the story of RAG doesn't end with the basic idea of retrieval + generation. Over time, a series of RAG architectures has emerged, each one introduced to solve specific shortcomings of the earlier approaches.
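
The basic "retrieval + generation" loop described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the method from the article: the in-memory `DOCUMENTS` list, the bag-of-words cosine scoring, and the names `retrieve`, `build_prompt`, `generate`, and `answer` are all hypothetical, and `generate` is a stand-in for a real LLM call so the example runs offline.

```python
import math
import re
from collections import Counter

# Hypothetical "external source". A real system would query a search
# index or vector store instead of a hard-coded list.
DOCUMENTS = [
    "S3 Standard is designed for frequently accessed data.",
    "PostgreSQL is an open-source relational database.",
    "LSTM networks model sequences such as sensor readings.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, passages):
    """Ground the question in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def generate(prompt):
    """Placeholder for an LLM call (assumption): echoes the prompt."""
    return prompt


def answer(query):
    """Retrieve first, then generate from the grounded prompt."""
    return generate(build_prompt(query, retrieve(query, DOCUMENTS)))


if __name__ == "__main__":
    print(answer("Which database is open-source?"))
```

Swapping `generate` for a real model call and `retrieve` for a proper index would keep the same shape: the model only sees the question together with whatever the retrieval step returned.
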
What began as a simple concept has grown into a sophisticated ecosystem of patterns, each designed to tackle real-world challenges such as maintaining conversational context, handling multiple data sources, and improving retrieval relevance. In this article, we'll explore the major RAG architectures in an evolutionary sequence. We'll see how each new architecture builds upon and resolves the limitations of its predecessor, using visual diagrams to illustrate the problem each one tackles and the solution it provides.

Building AI-Driven Anomaly Detection Model to Secure Industrial Automation

Aug 26th 2025, 18:00 by Tanu Jain

Introduction

In modern industrial automation, security is a primary requirement for keeping connected industrial devices operating without disruption. However, the rise of cyber risks significantly threatens the industry's sustainable operation. Evolving cyberattacks can affect the systems that control industrial processes. Modern attacks are more targeted and designed to evade detection by traditional defensive approaches. Tackling these evolving threats requires a proactive approach rather than a purely defensive strategy. This article presents a use case for building an anomaly detection framework using artificial intelligence (AI): specifically, a hybrid learning model consisting of a deep learning LSTM model for feature extraction and a machine learning (ML) classifier to detect and predict anomalous behavior in industrial automation. Next-generation technologies, also known as Industry 4.0, have evolved to meet the challenges and requirements of optimal operations and efficient sustainability in industrial automation networks.
In this modern era, the development of advanced mobile networks (5G), big data analytics, the Internet of Things (IoT), and artificial intelligence (AI) provides excellent opportunities for better and more optimal industrial operations. The integration of mobile networks, for example, enables the seamless operation of millions of IIoT devices connected simultaneously with minimal bandwidth and low latency. However, alongside these opportunities, these technological paradigms also open a new door for cyber-criminals, who can affect the sustainability and operations of industrial networks.

Oracle Standard Edition vs PostgreSQL (Open Source): Performance Benchmarking for Cost-Conscious Teams

Aug 26th 2025, 17:00 by Rishu Chadha

Relational databases sit at the core of nearly every application stack, powering everything from customer transactions to critical business reporting. Choosing the right database is no small decision: it influences application performance, scalability, maintenance overhead, and ultimately, total cost of ownership. Two of the most popular contenders in the OLTP (Online Transaction Processing) space are Oracle Standard Edition (SE) and PostgreSQL (open source). Oracle SE has a long-standing reputation in the enterprise world for its transactional integrity, advanced concurrency controls, and rock-solid durability. It's a go-to choice for industries that demand reliability, like finance, healthcare, and manufacturing. PostgreSQL, on the other hand, has emerged as a developer favorite in recent years, celebrated for its performance, extensibility, and, of course, its open-source licensing model that removes vendor lock-in.

A Comparative Analysis of GitHub Copilot and Copilot Agent: Architectures, Capabilities, and Impact in Software Development

Aug 26th 2025, 16:00 by Devdas Gupta

Artificial intelligence (AI) is rapidly reshaping how software is built, tested, and maintained. GitHub Copilot leads this shift as a smart coding assistant that suggests real-time code completions by learning from billions of lines of public code. As the complexity of development work continues to grow, so does the need for an AI tool that extends beyond code completion. Enter GitHub Copilot Agent, a more autonomous assistant that can comprehend natural language, traverse multiple project files, and perform more advanced development tasks such as refactoring, debugging, and generating unit tests.
